Monday, October 17, 2011

Handling historical data with SQL - Common errors in datawarehousing

It has been a while since my last post. Here is an old post I put here because it comes up in mature enterprise data warehouses. Two easy points that are too hard to put in Power Point, but easy enough to take care of in the warehousing solution. Problems are intervened.

1) GETDATE() used directly

In MS SQL Server GETDATE() is nondeterministic which is suboptimal when it comes to performance and schema binding.

2) BETWEEN is used

BETWEEN is an inclusive operator, which means that one can't really step with it the way I see it. Say you step one day at the time, you would have to use for date d: "x BETWEEN d AND d+.9999999" or risk having

a) the same data at two consecutive dates

b) two historic versions for the same BK

to give an example, for

- 1000002; "1901-01-01 00:00"; "2009-10-24 00:00"; "1234"; "Helly hansen";...

- 1000003; "2009-10-24 00:00"; "2049-12-31 23:59"; "1234"; "Helly Hansen";...

the query "d BETWEEN Invoice.ValidFrom AND Invoice.ValidTo" would for d="2009-10-24 00:00" give both rows, which should crash the ETL job or pollute the result.

3) One usual practice

For this reason some use between statements like

"d BETWEEN '09:00:00.0000000' AND '16:59:59.9999999';"

or

"d BETWEEN '09:00:00.0000000' AND '16:59:59';"

which might be working as long as you are absolutely sure that this will not be cast, or it might be rounded up, which is even worse (looks like it works but don't).

SELECT CAST(N'2009-12-12 16:59:59.199' AS DATETIME) -> "2009-12-12 16:59:59.200"

4) The simple solution

"d >= '09:00' AND d < '17:00';"

will work no matter what data type is used and therefore is more portable, I think.

So, now that’s documented

Have a nice one,

Gorm Braarvig

Sunday, April 17, 2011

Friday, March 18, 2011

"Domain Driven Design" and "Information Islands"

This way you create an information Island bound on the application layer!

The integration work between these systems, as they grow in fast iterations (say Scrum-sprints) will surely be huge, and the "single version of the truth" across the value chains will be a distant dream, evens when it comes to central dimensions like "Customer" and "Product"!

Will be exciting to see the solutions to this, so far I have heard

- Shared Kernel

Well... you need it in the database to solve all the integration points, you can't expect ETL and EAI to use an application layer that changes all the time, you want access to the databases in most cases. Many reasons for that across scalability, security, ease of implementation and testing etc

- Anticorruption Layer

Well, in a siloed system this would in my mind include context tables on every single shared entity, which I haven't really seen samples of yet, I expect this is not thought through. Might be promissing.

- Explicit model delimination

I didn't understand the consept, which tells me that it might be too complex for a simple common problem. The solution shouldn't be so much more complex than the problem

I believe the DDD is great for Cabin-rental systems and Access card systems, but the SOA-solution? Well prove that you can handle a strategy of the common version of your data. I personally like to be threated as the same identity across the functions of the companys I interact with...

...this is a reason for working on MDM (Master data management).

just my 2 cent

have a good one

/G

Sunday, November 14, 2010

Attempting to program C++ once more

I have been trying (for a few hours) to create a good checksum function using C++ and found a nice MD5 implementation I thought would be a nice starting point.

The business case for this is that I do enterprise data warehousing and fast hashing combined with streaming (in concert with loading and smart indexing) is a way of solving all hard performance problems I have seen.

Compiling a DLL and using it in SQL Server (SQL Server 2011 CTP1) was harder than it should, I actually need to either…

- find an installation of SQL Server 2005x64 and steal a lib-file or

- use LoadLibrary and GetProcAddress (which is a lot of work)

…to be able to do this.

This is sad and unacceptable, I will have to abort and be happy with the SQL CLR’s I have created already. Sub-optimal because

- the number of parameters can not be variable and

- performance is, of course, not as good as what I can do with native code

I haven’t given up but need to devert solution until I write a desent parallell bulk-loader, I think,

have a good one

/G

Friday, July 2, 2010

Excel: read data from stored procedure, with great difficulty

- Restarting Excel

- Restarting my computer

- Reducing my environment

- Reducing my code

- This finally gave me a positive result

- This finally gave me a positive result

Use "SET NOCOUNT ON" in my Interface procedure (it calls other procedures, but these does not need NOCOUNT.

Here is a simple (working standalone) sample for SQL Server 2008 and Excel 2007/2010:

<tsql>

/* Create some temp db */

CREATE

DATABASE tvfTest

GO

USE tvfTest

GO

CREATE

TYPE TTable AS

TABLE (SomeKey INT, SomeValue SYSNAME)

GO

--*/

/* Create "Internal" proc that takes tvp */

CREATE

PROC Internal @t TTABLE READONLY

AS

SET

NOCOUNT

ON

-- Not imnportant here, but removes extra noice.

SELECT SomeKey=0,SomeValue='Zero'

UNION

ALL

SELECT

*

FROM @t

GO

--*/

/* Create "Interface" proc that uses tvp and return result set */

CREATE

PROC Interface @test INT=0 AS

DECLARE @t TTable

IF @test=1

BEGIN

INSERT @t VALUES(1,'One')

-- Fails (because rowcount is returned and it indicates that write operation performed?)

END

ELSE

IF @test=2

BEGIN

-- Same as above, but "SET NOCOUNT ON" helps avoiding error!

SET

NOCOUNT

ON

INSERT @t VALUES(1,'One')

END

EXEC Internal

@t

GO

--*/

/* Call with tvp and test */

EXEC Interface

0 -- 0,Zero -- Command(s) completed successfully.

EXEC Interface

1 -- 0,Zero;1,One -- (1 row(s) affected)

EXEC Interface

2 -- 0,Zero;1,One -- Command(s) completed successfully.

GO

--*/

/* Map up in Excel */

</tsql>

<excel>

| Without insert | |

| SomeKey | SomeValue |

| 0 | Zero |

| With insert without NOCOUNT | |

| SomeKey | SomeValue |

| With insert with NOCOUNT | |

| SomeKey | SomeValue |

| 0 | Zero |

| 1 | One |

<excel_description>

Add three external TSQL connections with command type SQL and commands:

- EXEC Interface 0

- EXEC Interface 1

- EXEC Interface 2

</excel_description>

The middle one (Interface 1) should not work because it returns a non-empty "rowcount"!

I do not know if the bug in Excel is by design to lower the danger of SQL Injection attacks or because of a suboptimal data retrieval function.

Have a nice day

/G

Monday, May 24, 2010

Enable host to image network in Hyper-V

Hyper-V, while better than the alternatives, has some short comings when it comes to the client; copying files is hard and screen support is not ideal.

That is why I prefer using the host to access the image through the network stack with Remote Desktop or just the browser.

Here are the steps to set up the communication:

- Add a virtual adapter

In Hyper-V this is accomplished on the host in "Virtual Network Manager" by adding an "Internal" network I usually name "example.com" - Set up adapter inside the image

Ensuring the image is shut down (and not in save state mode) I add "Network Adapter" and select the net created in step 1.

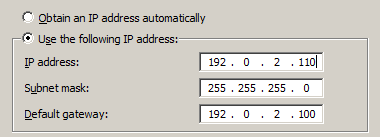

After restarting the image I open "Network Connections" and set the IP to 192.0.2.110, mask 255.255.255.0 and optionally default gateway to 192.0.2.100.

Last I turn off firewall completely for this card (steps depends). - Set up adapter on host

On "Network Connections" I set up the adapter manually with IP 192.0.2.100, mask 255.255.255.0. Advanced: if I have AD inside the image and the host is part of the domain I set DNS server to 192.0.2.110.

If I don't use DNS in an image (or the host) I change the "hosts" file (or lmhosts) with notepad c:\Windows\System32\drivers\etc\hosts and add lines for the names we want the inside of the image to be known, for instance:- intranet.contoso.com 192.0.2.110

- www.contoso.com 192.0.2.110

- contoso.com 192.0.2.110

- contosoApp 192.0.2.110

- Test

In host start cmd and "ping 192.0.2.110"

In image start cmd and "ping 192.0.2.100"

From host start mstsc and open "contosoApp"

From host start iexplore and navigate "intranet.contoso.com" - Result

I now browse the image from the host and use remote desktop. This way screen works better and browser app (and Visual studio etc) works much much better.

Friday, November 13, 2009

SQL Server 2008 R2 MDS - Microsoft MDM Solution

Two hours effective work was enough to

- install the product (including reading how to do it),

- installing IIS on Windows 7 (you need a modern advanced OS)

- implementing security

- adding a model (PoC) with a hierarchy

- reading most of the documentation

Books on-line is sparse, so I haven't really done a real walk-through yet. I hope a tutorial is coming soon, or I might post one here (after getting to know the thing better).

Wonder if I can run a hybrid model where I can have some entities work as in the "registry"-model, and some as in the "Central Master Data Repository"-model.

I would like to put all my meta data into this thing, hope it is mature enough.

I went to a couple of sessions on SQL PASS, but they were introductory, can't be many that have experience with this thing.

Anyhow, have a nice day.

G.

Tuesday, November 3, 2009

Performance hit for SELECT because of fragmentation?

Q: Is there a performance hit on the "select"-side because of some fragmentation of the data base files caused by auto growth?

A: No. But you might want to enable "Perform volume maintenance tasks"

Question details

We have a generic script-based deployment of many data bases, and therefore have auto-growth on them, so they are created in exactly the same way. There are a total of ten data bases with twelve files each, so 120 files. The files all start at 100MB and grow by 25%. To give each data base special treatment makes changes more expensive (We charge by the hour).

Solution details

1) Keep scripts as is.

2) Enable "Instant Initialization"

a) open a command window and run "sc qc

b) note the user name from "SERVICE_START_NAME" attribute.

c) Fire up ocal policy editor, start -> run -> gpedit.msc

d) navigate to "Computer Configuration\Windows Settings\Security Settings\Local Policies\User Rights Assignments", open "Perform volume maintenance tasks"

e) add the user from step b)

f) restart your sql server instance (not sure if this is needed, but seems a good idea)

and thats all,have a nice day

btw:I will not mention the name of the expert that helped me, because the person can not make public advice because of legal reasons. And things might have been distorted by me translating the advice into this post.

Thursday, September 17, 2009

Handling history failures in data warehousing

I work with Business Intelligence and use slowly changing history (wikipedia) extensively. We have a multi-layered topology and use multiple modeling techniques, including "data-vault", "star-schema" and relational model.

The way we implement history…

- It requires one or more identified unique key fields in every source system table

- One row in the original source system initially produces two rows in the destination (for each layer in the topology layer)

- Change in any field in the source produces a new row in the destination

- Deletes in the source creates new rows in the destination

This way a row in the source system can easily be ten or more in the data warehouse. With modern hardware this is not really a problem, even if we get into the terabytes.

Problem

The problem comes when we get errors in the history-mechanisms. A typical problem can be that one static row in a source system could result in a new row in the destination for every load even if there are no real changes.

If introduced in an early layer, this will spread like the plague in the warehouse (and not like bird flu, either).

These errors take time to solve and requires expensive talent.

Solution

We now have a set of functionality that automatically fixes things up and centralize any misbehaving data in our meta data repository or elsewhere.

Result

We can use the talent to solve more problems interesting to the customer and be less proactive (more execution-focused and reactive) on this technical problem because problems will not grow severe.

; )

I find data warehousing pretty nice, since there is untouched ground here and there with great promise. Of course, the technical side of these projects is not necessary the hardest to deliver on, but it would be sad if it was the show stopper.

I also like some metaphors used in the sport, especially the time line (I'll use it next time).

Wednesday, August 12, 2009

SQL Server 2008 R2 CTP / Windows 7 x32 – Initial experience

No problems.

Hardware is Virtual PC image on Vista/64 laptop.

Setup is

- No UAC

- 1GB RAM for the image

- 10 logical disks on three image files, 64Kb cluster size for data drives

- Separated prog files db files, temp files and log files

I installed SQL Server engine and Integration services and tried some complex SSIS packages created for 2008. I use

- custom data flow components

- script based deployment

- hierarchy of packages (packages calling other packages)

- custom Meta Data Repository

- advanced configuration

- SQL CLR TVF

- …other potentially problematic things

Packages implements ETL for an enterprise data warehouse.

Things seem to work as is, product looks better than I expected. I get a RC feeling using this thing, if only things performed in the virtual environment, but it still does not, all GUI tools are IMO slow, but this might be because of fragmentation on disk holding vhd. I'll fix fragmentation and have another look. I'll also try an ongoing "appliance project" I have, that demands compatibility with on many parts of the internal SQL Server meta models.

I have a good feeling with the new SQL Server.

That's it; have a good one,

Gorm

Monday, May 25, 2009

The shortest useful program I have written: %1

This is the shortest program I have written, it is two bytes:

<ExecuteCommand.cmd>%1</ExecuteCommand.cmd>

The task of this program is to execute the parameter as a program, I use this inside Visual Studio on the "Open With"-menu to call cmd-scripts inside the GUI.

The only program that comes close to this is a 5-byte boot program I wrote for 8086 assembly about twenty years ago.

Saturday, March 7, 2009

Get gigs of disk space with a single command in Windows Server 2008R2 (and many others)

powercfg.exe /hibernate off

This will remove your hibernation file, which, in 2008 server makes no sense anyway. Gains the amount of disk space as you have installed RAM. I just got another 4GB with this command, and feel like starting a defrag.

Enjoy, Gorm

Friday, July 25, 2008

Hyper-V on the laptop (Compaq nc6320)

I want to run Hyper-visor and other software (SQL Server 2008) on my laptop. I consider running Team Foundation Server, Windows XP, SQL Server versions, old Visual Studio versions, Visio, BizTalk and others inside Hyper-V images on my laptop. At the same time I want the laptop to function as normally (internet explorer, media player, office, etc).

Hyper-V runs on 64-bit Windows Server 2008. The machine needs to support hardware-assisted virtualization and data execution prevention, and these must be enabled in BIOS.

- Download driver packages for Vista x64 and save on flash-drive

- Install Windows Server 2008

- Rename computer

- start control userpasswords2 and enable automatic logon

- Install drivers and enable wireless service (not automatic on server)

- Install Intel Graphics sp37719

- Install "Integrated module with Bluetooth wireless technology for Vista" SP34275 don't care if it says "no bluetooth device"

- Replace wireless driver: \Intel Wireless sp38052a\v64

- Add feature "Wireless..."

- Install Intel Graphics sp37719

- Connect to internet

- Start updates

get updates for more programs

automatic

important updates, only - View available updates

Add optional: Hyper-V - Install Hyper-V

- Add role Hyper-V

The machine is now a Hypervisor. Next steps are to get Windows Server 2008 to act more like a client OS.

- Install other drivers

- SoundMAX SP36683

- SoundMAX SP36683

- Install Feature "Desktop Experience" (Media Player, etc)

- Create shortcut "net start vmms" for starting Hyper-V and "net stop vmms" for stopping Hyper-V

- Set startup for service "Hyper-V Virtual Machine Management" to manual

- stop vmms

- Install Virtual PC 2007 (in case I want wireless network from a vm)

- Enter product key and Activate windows

Since I have a working version of Windows, this is the time to activate, next step would ideally be to install office. I don't have office handy (am on vacation), so I install the viewers for Word, PowerPoint and Excel

I am now ready to install Visual Studio and the servers, first out is SQL Server 2008 rc.

- Set up IIS (with all the iis6 stuff)

- Set up Windows SharePoint Services 3.0 x64 with Service Pack 1 to run "stand-alone"

- (optionally) set up Windows SharePoint Services 3.0 Application Templates – 20070221

- Create user ServiceAccount to run all the sql server services

- Install SQL (complete) with RS in SharePoint integrated mode

- Open firewall (http://go.microsoft.com/fwlink/?LinkId=94001)

open tcp 1433 (engine), 2383 (ssas), 80 (sp), 135 (ssis), 443 (https), 4022 (service broker)

udp 1434 (ssas)

and "notify me when new program blocked"

Now get all updates.

Next step is to install Visual Studio, which should be straight-forward.

OK, I'm off to the beach,

Gorm

Tuesday, July 15, 2008

CMD: redirect error output

I write quite a few batch-files these days and want the error messages to be supressed. I had a vague recollection telling me that this was possible, and found it googling, so here it is:

"2>nul".

In a sample:

Del *.log /f /q 1>nul 2>nul

Deletes all log-files, hiding output (1>nul) and error output (2>nul). If I remember correctly (3>nul) was hiding printer output. In the old days. You can, of course redirect to files as well:

Del *.txt /f /q 1>out.txt 2>err.txt

Del *.log /f /q 1>>out.txt 2>>err.txt

This sample is supposed to put all the output in out.txt and all the errors in err.txt.

Gorm

Sunday, July 13, 2008

ETL top-down 1 – Architecting abstraction layering

I work at a very exciting BI-program where we have been able to do things right. The project is part of a larger program that contains Business Consulting, Master Data Management and Business Intelligence and, who knows, maybe even Information Lifecycle Management.

My work on the project includes ETL on the Enterprise Data Warehouse, a central project in the program.

My trade is, and has for some time been architecting developer. This gives me advantages early in the ETL process when it comes to what I like to call "Abstraction Layering"

Abstraction Layering – customer perspective (why)

Abstraction layering helps to set the balance between "keeping things open" vs. "delivering as soon as possible".

For my current project we need to deliver quickly while handling a few issues (from the top of my head):

- Loosely defined long term goal – I think

- Distributed developers, both geographically, and experience-wise

- Many source systems

- Large master data management and other projects changing the environment

- Real-time

- Right time

- Traceability

When implementation is done, we need to focus on simple measurable tasks. One way to do this is to model the work on well defined levels of abstraction. This way we can design top-down by having the most abstract discussions first, then some intermediate discussions and lastly the implementation details.

Things we do interesting to ETL includes:

- Selecting reference architecture.

Master Data Management, Hub and spoke EDW with 2G, full archive of source systems, data marts, custom Meta Data Repository. - Create ETL "horizontal" layering – interfaces and documentation.

Packages take data from one architecture layer to another, grouping functionality and enabling measurability. - Create ETL "vertical" layering – restrictions and grouping.

Jobs uses "job packages" uses "aggregated packages" that groups functionality in measurable chunks. - Specify update intervals and delivery.

We plan for nightly job, hourly job and real-time job. Monthly, weekly reports, operational BI and more. - Define deployment, operations, etc.

Operations implements ITIL, we should interface with it as it matures.

We deliver.

Abstraction Layering – architect perspective (how)

Architecting abstraction layering is done to serve the data integration projects by empowering a few roles, these includes:

- Project manager

Work breakdown structure gets easier because one for any integration task have some nice metaphors. - Developer

Gets assignments with a predictable scope. - Tester

Can reuse tests because many atomic items has the same scope.

The architect gets a bag of expression for reuse in the modeling of all the ETL jobs and test templates. It gets possible to create templates for kinds of functionality used often or placeholders for functionality other systems depends on.

Abstraction Layering – project manager perspective (what)

The developer lead gets measurability and some nice metaphors from the abstraction layering, in our current project they are

- Job

Roughly equivalent to executable, e.g.: "Nightly job". - Agg

Typically one for each of the different states a job goes through, e.g.: "Source extract" - Fun

Specific function for an entity, e.g.: "Extract <<customer tables>> from source system X" - Task / Tsk

Task is part of a function, it moves, changes or collects data. Data in the warehouse knows where it comes from by reference to a such task id, a sample task might be "Extract customer address from source system X".

The project manager must choose when these metaphors are appropriate, of course.

Abstraction Layering – developer perspective (what)

When assigned a task a developer can by the name of the delivery see how it fits into the wide picture on three dimensions

- Job/Agg/Fun/Tsk

Dictates the level along the low-level to high-level axis. - Context

Horizontal layers in the architecture touched, for instance SourceDsa or DmsaDm. - Function

Typically the 'T' in "ETL".

Most work repetitive by nature should have current templates controlled by the architect.

Abstraction Layering – test perspective (what)

Too early to say, but it looks like a combination of the abstract layering, "data haven" and MR shall make test-driven development beyond simple testing possible. Looks like integration and performance testing shall come relatively cheap.

Fading out

OK, this grew pretty long, looks like I'll have to do more on parts of this later, with more concrete samples. Hope I find the time.

G.

Thursday, May 1, 2008

Business Intelligence VPC Release 6

This is very nice indeed. You need at least 2GB RAM and you really want at least 3GB.

• | |

• | |

• | |

• | |

• | |

• | |

• | |

• |

1) Download and unpack (by running the .exe). This image runs fine from USB disk (for me, anyway), I never use compression (some do).

2) Download and install Virtual PC 2007 (google is your friend)

3) Configure your hardware (turn on virtualization in BIOS if you can, and the protection flag too)

4) Create your own .vmc file (choose new vm in Virtual PC), set RAM size to about 2/3 of your total RAM and remove network adapter. I'm told that the amount of RAM should be dividable with 64M, can't really back that up with knowledge or measurements, but I do it anyway.

5) Run the image

6) Log on with "Administrator" and "pass@word1", remember that you have English keyboard in the image (I use Norwegian on the mother system, so " becomes @...)

7) Enable auto logon (see older post).

8) Disable System Event Tracker (older post)

From now on just turning on the image will get you ready to play with…

Presenter Scripts

Business Intelligence "All-Up" Presenter Script

Financial Analyst

VP of Sales

Sales Representative

Chief Financial Officer

Data Analyst

2007 Microsoft Office System Launch Demo

Data Mining Deep Dive

Enterprise Manufacturing Intelligence Demo

Banking Demo

Federal Government Demo

State & Local Government Demo

Healthcare Demo

Oil & Gas Demo

Retail Demo

Project REAL Sample Demos

SQL Server 2005 Demos

PerformancePoint Launch Demo

PerformancePoint Budgeting Demo

PerformancePoint CRM Demo

PerformancePoint MAP (Monitoring, Analytics, and Planning) Demo

PerformancePoint Planning Demo

PerformancePoint Planning Consolidation Demo

PerformancePoint Management Reporter Demo

PerformancePoint Sales Forecasting Demo

PerformancePoint Strategic Planning Demo

OfficeWriter Demo

PerformancePoint Hands-On Labs

New Account Load Demo

Applications Installed

Windows Server 2003 R2 Service Pack 2

Internet Explorer 7

Office Enterprise 2007

Office Visio 2007

SharePoint Portal Server 2007

SQL Server 2005 Service Pack 2 (w/ SSIS, SSAS, SSRS)

SQL Server 2005 Data Mining Add-ins

SQL Server 2005 SharePoint Integration Add-in

ProClarity Analytics Server 6.3

ProClarity Dashboard Server 6.3

ProClarity Desktop Professional 6.3

ProClarity Web Professional 6.3

PerformancePoint Planning Server 2007

PerformancePoint Planning Add-In for Excel

PerformancePoint Planning Business Modeler

PerformancePoint Monitoring Server

PerformancePoint Monitoring Scorecard Builder

OfficeWriter for Word Plug-In

OfficeWriter for Excel Plug-In

Project REAL Reference Implementation

Business Intelligence Metadata Samples

Sample Databases / Cubes

AdventureWorks

AdventureWorks_Mfg (for Lean Manufacturing)

AdventureWorksDW (for AdventureWorks data warehouse)

Camden (Local Government)

Contoso (for PerformancePoint Planning)

EP

Government_AppDB (PerformancePoint Planning)

HC

Healthcare_AppDB (PerformancePoint Planning)

OSS (Healthcare)

PDW (for PerformancePoint Planning)

PeoplSoft Financials_AppDB (PerformancePoint Planning)

PPS Launch (for PerformancePoint Planning)

PSFIN_Data_Mart

REAL_Source_Sample_V6 (for Project REAL)

REAL_Warehouse_Sample_V6 (for Project REAL)

Personally I'll jump on "Business Intelligence Metadata Samples" first (as it is most relevant to what I do now). Hopefully I'll blog more about that later

Enjoy until August 17. 2009

Gorm Braarvig

Enable auto-logon

Enabling automatic logon for Windows can be done in two ways (that I know of) for installations connected to workgroup and one way for installations connected to domain.

This works for Windows XP, 2003, Vista, 2008 and most likely NT 4 and Windows 2000. This will not work for Windows 9x/ME.

1. The mouse-clicking way (should work with workgroup installations, only)

Start -> Run -> "control userpasswords2"

Opens up a GUI where you should be able to turn off "Require users to log on".

This also works on Windows Server 2008 Core.

2. Edit registry

This can be done with regedit.exe or reg.exe (Start -> Run -> "cmd /kreg.exe").

The values to change or add are under:

HKLM\Software\Microsoft\Windows NT\CurrentVersion\Winlogon

They are:

AutoAdminLogon REG_SZ "1"

DefaultUserName REG_SZ "Username"

DefaultPassword REG_SZ "Password"

DefaultDomainName REG_SZ "Domain or machinename"

One easy mistake to make here is to define AutoAdminLogon as REG_DWORD (if you are used to program against registry, that is): This will not work; AutoAdminLogon should be of type REG_SZ.

Obviously you should replace "Username" with a username and "Password" with a password.

"Domain or machinename" should be replaced with name of machine if you log in with a local account (always if you use workgroup) or shorthand domainname if you log in to a domain.

Warning: Your system administrator might not be impressed with having your username and password here. Always adhere to company policy in this regard.

Remember that if your machine often fails with blue screen, power failure or disk removal (typical when you run virtual from external drive and disconnect the drive), you want to disable the shutdown event tracker warning, see post bellow.

Regards,

Gorm Braarvig

Disable shutdown event tracker warning

Problem: unwanted display of shutdown event tracker

Working with vpc images, I some times turn off the images without caring about "clean" shut down. This enforces a warning the next time I boot. Since I some times use autologon (later post), this is annoying.

Solution: Disable "Display Shutdown Event Tracker"

1. Start "Group Policy Object Editor"

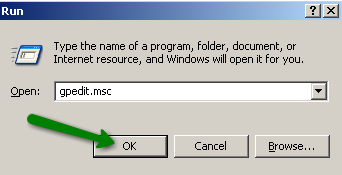

Start -> Run -> gpedit.msc:

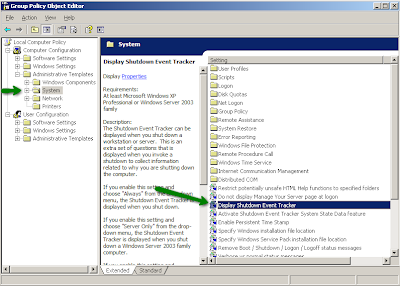

Navigate to the setting:

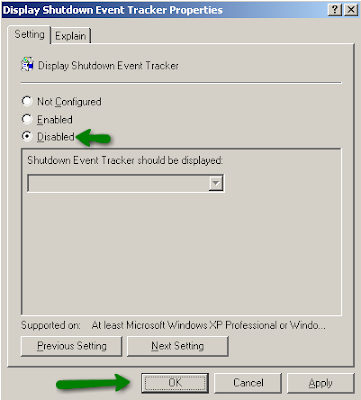

Select "Disabled" and press OK:

Result: "Shutdown Event Tracker" is not displayed anymore.

Gorm Braarvig

Tuesday, April 29, 2008

Coconuts and seeded grapes

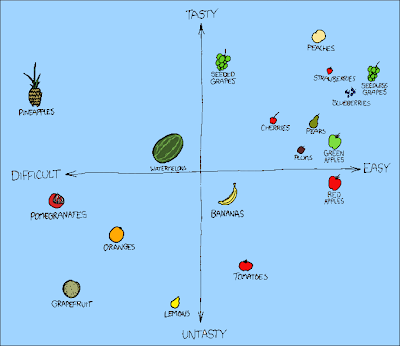

If you can't see the picture: stop reading, you are wasting your time.

img src=xksd.com

I used to do "custom bi", creating my own import programs (in a multitude of languages with more technologies than I care to recall), using Excel and print outs for analysis.

I now do Microsoft BI.

Loose analysis of Gartners quadrant should place custom BI close to coconuts (bellow to the left of the visible range) and MS BI close to the seeded grapes (moving towards peaches).

Gorm Braarvig

SQL Server - Drop database, drop current connections first

Hi!

I write scripts to generate my database, I want my scripts to run from command line using SQLCMD and (at the same time) use SSMS to edit the scripts and some times run them there. This creates a problem when dropping databases. Databases will not be dropped while they have open connections.

The solution is easy; here goes:

ALTER DATABASE x SET SINGLE_USER WITH ROLLBACK IMMEDIATE

In a sample:

PRINT 'Recreate database...'

IF EXISTS (SELECT name FROM sys.databases WHERE name='

Some_DB')

BEGIN

ALTER DATABASE Some_DB SET SINGLE_USER WITH ROLLBACK IMMEDIATE

DROP DATABASE Some_DB

END

GO

CREATE DATABASE Some_DB

GO

Gorm Braarvig

Saturday, April 26, 2008

SQL Server 2005: High definition timer (technical)

Timing with extreme precision

I) Preface

I played with writing a generic metadata repository (prototyping) to prepare for ETL at Nortura. I'm not doing the meta data design, but want to understand what we talk about, and to get some experience it's one of two ways I could think of doing it:

- Adaption - "Monkey see monkey do": Watch other do stuff and try to copy the submodalities (did I write this)

- Brute force - "Trial and error and success": Try, fail, try again, fail a little less, try again, succeed.

I want to do both.

a) Brute force

I started with the hands-on and created a generic meta data table with support for versioning (of the model) and hierarchy:

(

GenMetumId

,GenMetumType

,GenGrupperendeMetumId

,GenMetumForrigeId -- GenMetumPreviousId

,GenMetumGyldigFra -- GenMetumValidFrom

,Navn -- Name

,EkstraXml

,StartTid -- StartTime

,SluttTid -- EndTime

,Suksess -- Success

,Nyeste -- Newest

)

then I created some stored procedures and tried to insert some rows and update them with status etc. surprise surprise:

The timestamps were mostly equal!

BOL reveals that datetime has a resolution of some 3 ms, but this does not account for the results I got. My resolution was 10-15 ms, which to me is an etarnity (I used to do low-level stuff)

This needs to be fixed. By me. Off hours. (as in hobby).

See solution in point II).

b) Adaption

I am so lucky that I am to implement ETL on one of Europes largest BI initiatives, so I'll get to be good with operational or technical meta data (I'm not versed in the jargon, yet)

II) Implementation (my favourite)

(I know design preceedes implementation in real life, but we're playing here)

a) A better .NET "DateTime.Now"

I know DateTime.Now is sufficient (10M ticks per sec). but we are playing, so let's do it properly.

WINAPI QueryPerformanceCounter

I've written some timing routines far back for Windows CE, because the .NET timer routines does not work very well on Pocket PC and Smartphone (resolution is not good). QueryPerformanceCounter and QueryPerformanceFrequency are the WINAPI methods of reading the OEM high resolution counter, which is the lowest level of time-taking on modern computers.

#region "Timer routines"

[DllImport("kernel32.dll")] static extern public bool QueryPerformanceCounter(out UInt64 i64);

[DllImport("kernel32.dll")] static extern public bool QueryPerformanceFrequency(out UInt64 i64);

static protected UInt64 i64Freq = 0;

public static UInt64 GetNowI64()

{

UInt64 i64;

if (!QueryPerformanceCounter(out i64))

i64 = 0;

return (i64 * 10000000) / GetFreq();

}

public static UInt64 GetFreq()

{

if (0 == i64Freq)

if (!QueryPerformanceFrequency(out i64Freq))

i64Freq = 1;

return i64Freq;

}

protected static DateTime dtBaseline = DateTime.MinValue;

protected static UInt64 i64Baseline = 0;

public static DateTime GetNowDt()

{

UInt64 i64Now = GetNowI64();

DateTime dt;

if (dtBaseline == DateTime.MinValue i64Baseline == 0 i64Baseline > i64Now)

{ // We have no reference point, or reference point is no longer sufficient: create one.

dtBaseline = DateTime.Now;

i64Baseline = i64Now;

dt = dtBaseline;

}

else

{ // We have a sufficient baseline, return baseline plus timespan for diff

dt = new DateTime(dtBaseline.Ticks + (Int64)(i64Now - i64Baseline));

}

return dt;

}

#endregion "Timer routines"

Converting these routines to SQL CLR is a non-brainer.

[Microsoft.SqlServer.Server.SqlFunction]

public static SqlDateTime GetTime()

{

//return (SqlDateTime)DateTime.Now;

return (SqlDateTime)GetNowDt();

}

[Microsoft.SqlServer.Server.SqlFunction]

public static SqlString GetTimeAsString()

{

return (SqlString)(GetNowDt().ToString("O"));

}

[Microsoft.SqlServer.Server.SqlFunction]

public static SqlInt64 GetFrequency()

{

return (SqlInt64)(Int64)GetFreq();

}

[Microsoft.SqlServer.Server.SqlFunction]

public static SqlInt64 GetTickCount()

{

UInt64 i64;

if (!QueryPerformanceCounter(out i64))

i64 = 0;

return (SqlInt64)(Int64)i64;

}

[Microsoft.SqlServer.Server.SqlFunction]

public static SqlInt64 GetMs()

{

return (SqlInt64)(Int64)GetNowI64();

}

[Microsoft.SqlServer.Server.SqlFunction]

public static SqlInt64 GetTicksPast(Int64 zero)

{

UInt64 uiRes = GetNowI64() - (UInt64)zero;

return (SqlInt64)(Int64)uiRes;

}

[Microsoft.SqlServer.Server.SqlFunction]

public static SqlInt64 GetMicrosPast(Int64 zero)

{

UInt64 uiRes = GetNowI64() / 10;

uiRes -= (UInt64)zero;

return (SqlInt64)(Int64)uiRes;

}

SQL Server import:

CREATE ASSEMBLY MeasureTime AUTHORIZATION [dbo] FROM ... WITH PERMISSION_SET = UNSAFE

CREATE FUNCTION Meta.GetFrequency() RETURNS bigint AS EXTERNAL NAME MeasureTime.UserDefinedFunctions.GetFrequency

CREATE FUNCTION Meta.GetMicrosPast(@zero bigint) RETURNS bigint AS EXTERNAL NAME MeasureTime.UserDefinedFunctions.GetMicrosPast

CREATE FUNCTION Meta.GetMs() RETURNS bigint AS EXTERNAL NAME MeasureTime.UserDefinedFunctions.GetMs

CREATE FUNCTION Meta.GetTickCount() RETURNS bigint AS EXTERNAL NAME MeasureTime.UserDefinedFunctions.GetTickCount

CREATE FUNCTION Meta.GetTicksPast(@zero bigint) RETURNS bigint AS EXTERNAL NAME MeasureTime.UserDefinedFunctions.GetTicksPast

CREATE FUNCTION Meta.GetTime() RETURNS datetime AS EXTERNAL NAME MeasureTime.UserDefinedFunctions.GetTime

CREATE FUNCTION Meta.GetTimeAsString() RETURNS nvarchar(MAX) AS EXTERNAL NAME MeasureTime.UserDefinedFunctions.GetTimeAsString

Testing with some simple queries shows me that it worked. (hope link to image is not broken)

Green arrows

These demonstrates that you can get a higher granularity on timestamps than using GetDate() with Meta.GetTime(). GetDate jumps from ...573 to ...587, while Meta.GetTime() jumps 2, 4 and 6 ms. Two other facts stands out: times differ, so .NET-time seams not equal to T-SQL time, update of 4ms means that the granularity of datetime can't be exactly 3ms.

Blue arrows

GetTicksPast() (10 000 000 pr sec) and GetMicrosPast() (1 000 000 pr sec) works as expected. Calling CLR, getting the time and putting it into a cell in temp looks to take less than 50 microseconds or less than 1/20000 second, which is faster than I expected (but we have very desent laptops).

Red arrows

Putting the same functions multiple times on the same SELECT ensured me that they are called every time (without caching). I particulary liked GetTimeAsString() which measured time in readable format exact with seven digits after secounds... higher granularity than GetDate(), no?

III) What's the business case

You tell me, I just needed to write som code and wanted to share the result.

IV) Where is the code

http://www.codeplex.com/SqlClrMeasureTime

this got kind of nerdy, next one will be better

have a nice week end

Gorm Braarvig

Thursday, November 24, 2005

Access database from SQL 2005/64

1) Install SQL Server Express 2005, 32-bit

2) Configure both SQL Servers to use distributed queries

3) Create a database with a stored procedure in SQL Server Express to handle OLEDB requests

4) Link up SQL Server Express to SQL Server

5) Test it

OK. Let’s get to the details…

1) Install SQL Server Express 2005, 32-bit

http://www.microsoft.com/sql/editions/express/default.mspx

2) Configure both SQL Servers to use distributed queries

On both servers, run:

sp_configure 'show advanced options', 1

go

reconfigure

go

sp_configure 'Ad Hoc Distributed Queries', 1

go

reconfigure

go

3) Create a database with a stored procedure in SQL Server Express to handle OLEDB requests

On SQL Server Express, run:

USE [master]

GO

IF NOT EXISTS (SELECT name FROM sys.databases WHERE name=N'oledb32')

CREATE DATABASE [oledb32] ON ( NAME=N'oledb32', FILENAME=N'C:\Program Files (x86)\Microsoft SQL Server\MSSQL.4\MSSQL\DATA\oledb32.mdf' , SIZE=3072KB , MAXSIZE=UNLIMITED, FILEGROWTH=1024KB )

LOG ON ( NAME=N'oledb32_log', FILENAME=N'C:\Program Files (x86)\Microsoft SQL Server\MSSQL.4\MSSQL\DATA\oledb32_log.ldf' , SIZE=1024KB , MAXSIZE=2048GB , FILEGROWTH=10%)

GO

USE [oledb32]

GO

IF NOT EXISTS (SELECT * FROM sys.schemas WHERE name=N'oledb32')

EXEC ('CREATE SCHEMA [oledb32] AUTHORIZATION [dbo]')

GO

IF NOT EXISTS (SELECT * FROM sys.objects WHERE object_id=OBJECT_ID(N'[oledb32].[select_from_openrowset]') AND type in (N'P', N'PC'))

EXEC dbo.sp_executesql @statement=N'CREATE PROCEDURE [oledb32].[select_from_openrowset](

@select nvarchar(max)=''SELECT *'',

@provider nvarchar(max)=''Microsoft.Jet.OLEDB.4.0'',

@datasource nvarchar(max)='''',

@user_id nvarchar(max)=''Admin'',

@password nvarchar(max)='''',

@provider_string nvarchar(max)=NULL,

@query_or_object nvarchar(max)='''')

AS

BEGIN

SET NOCOUNT ON;

DECLARE @sql AS nvarchar(max)

SET @sql=@select +

'' FROM OPENROWSET('' +

'''''''' + @provider + '''''',''

IF NOT @provider_string IS NULL

SET @sql=@sql + '''''''' + @provider_string + '''''',''

ELSE

SET @sql=@sql + '''''''' + @datasource + '''''';'''''' + @user_id + '''''';'''''' + @password + '''''',''

SET @sql=@sql + @query_or_object + '')''

PRINT @sql

EXEC (@sql)

END

'

4) Link up SQL Server Express to SQL Server

On your 64-bit SQL Server, run:

sp_addlinkedserver @server='SqlOledb32', @srvproduct='', @provider='SQLNCLI', @datasrc='(local)\sqlexpress'

go

sp_serveroption 'SqlOledb32', 'rpc out', TRUE

go

5) Test it

On your 64-bit SQL Server, run:

SqlOledb32.oledb32.oledb32.select_from_openrowset @datasource='D:\some_valid_db_name.mdb',

@query_or_object='some_query_or_table'

I would like to explain the steps, but time is up.

Share and enjoy.

Wednesday, November 2, 2005

SQL XML (Re: SQL cursors: This was a funny one)

--------------------------------------------

Michael Rys:

You mean you could write the following simple expression with XML:

SELECT (SELECT * FROM Customers FOR XML AUTO, ELEMENTS, TYPE).query(

'for $c in /Customers

return

<Customers>{

for $p in $c/*

order by local-name($p)

return $p

}</Customers>'

)

:-)

eoMichael Rys:

--------------------------------------------

USE AdventureWorks

GO

CREATE PROCEDURE pXmlAllFieldsSorted(@tableName nvarchar(MAX))

AS

DECLARE @sql AS nvarchar(MAX)

SET @sql = 'SELECT (SELECT * FROM ' + @tableName + ' FOR XML AUTO, ELEMENTS, TYPE)' +

'.query(''for $c in /' + @tableName + ' return <' + @tableName +

'>{ for $p in $c/* order by local-name($p) return $p' +

'}</' + @tableName + '>'')'

PRINT @sql

EXEC (@sql)

GO

pXmlAllFieldsSorted 'HumanResources.Employee'

Saturday, October 29, 2005

-web-database-server OH YEAH database-web-server-

Heard about a web database server?

Or a database web server?

Take a look at mine, a mean machine search server (made for AJAX solutions).

Test bed for 32-bit version and a URI for searching for “microsoft” in Norway:

http://searchpalette.com/g/g.html

http://searchpalette.com/SPaletteGorm/0/25/microsoft

My 64-bit version searching from 10Million records getting page 25 of people called “Bil” with page size 25.

http://62.89.36.69/spalette/24/25/bil

Outputing an unbelievable 3151 bytes (scripts not included) for representing 25 persons with address and all phone numbers in a millisecond (before it gets to the router).

See it in AJAX action. Type “bil” at http://firmakatalogen.com/ and hit page 9. The 1400-1500 bytes needed to represent the hits should come more or less instantly after you let go of the mouse button. If not, it is a network latency problem.

The server, working on a XEON 64 takes up 50MB RAM for serving 5GB of raw data. I guess I could have a hundred of them on the same (single processor) machine. Integration is done with SQL Server 2005 (and has been done for about a year now in production, hope I am not breaking NDAs etc. etc. but it was the best solution, so I did it)

Well, my 64-bit webservices database server will be even more fun.

Thank you, Microsoft and Intel.

SQL cursors: This was a funny one

Is it possible to order the column names in alphabetical order in Sql Server?

This is a problem that in my view elightens how much you can’t do with SQL.

First thought is to use CLR.

Since I never use SQL OPEN CURSOR and FETCH the obvious took some time.

I am sure there is a more elegant solution using XML in some way, but I think XML gets overly complex at times, so I avoid using it if there are more practical solutions. Even if they don’t always look that elegant.

Here is my solution:

-- SQL to output table with columns sorted

CREATE PROCEDURE pTableWithSortedFields(@tableName nvarchar(MAX))

AS

DECLARE @sql as nvarchar(MAX)

SET @sql = 'SELECT '

DECLARE temporary_cursor CURSOR FOR

select name from sys.all_columns

where object_id = OBJECT_ID(@tableName)

order by name

DECLARE @field as nvarchar(MAX)

OPEN temporary_cursor

FETCH NEXT FROM temporary_cursor INTO @field

WHILE @@FETCH_STATUS = 0

BEGIN

SET @sql = @sql + '[' + @field + ']'

FETCH NEXT FROM temporary_cursor INTO @field

IF @@FETCH_STATUS = 0

SET @sql = @sql + ','

END

CLOSE temporary_cursor

DEALLOCATE temporary_cursor

SET @sql = @sql + ' FROM ' + @tableName

print @sql

EXEC (@sql)

GO

pTableWithSortedFields 'sometable'

-- SQL to output table with columns sorted

Well, answering posts (when I get the time) can be a learning experience. I am sure I will use cursors for something again soon, another tool is somewhat sharpened

Friday, October 21, 2005

Hardcore Programming (dis)cont

Hardcore Programming cont. will have to wait. I needed to put my AJAX database webservices server on 64-bit. Seems to work. HTTP.SYS was a real drag (with the newest platform SDK), so I had to do LoadLibrary and GetProcAddress on the lot. Hopefully it will pay itself at some point in the future.

Also, the SysWOW64 puzzled me a bit

Have a nice weekend

Yours, Gorm Braarvig

Sunday, October 16, 2005

Hardcore Programming cont.

New console application,

// stdafx.h

#include

// HelloConsoleWorld.cpp

int _tmain(int argc, _TCHAR* argv[]){

_tprintf(_T("Hello World")); ::MessageBox(NULL, _T("Hello MB World"), _T("MB World"), 0) ; return 0;}

dir:

debug - 40 960 HelloConsoleWorld.exe

release - 6 144 HelloConsoleWorld.exe

64bit debug - 31 232 HelloConsoleWorld.exe

64bit release - 7 168 HelloConsoleWorld.exe

64-bit versions are 1KB and 10KB larger. Fine.

Compiling to 64-bit was harder than I thought but creating two new configurations and compiling and linking all configurations I got what I wanted.

First looks at the images in a hex editor reveals that

- 64-bit version has something called _C_specific_handler, google'ing it gives me (as expected) nothing. Will I have problems getting rid of RTL?

- Both versions are compiled as UNICODE pr default. Nice.

Putting all 64-bit versions in ...\x64\... is nice, why not make a ...\x32\... too?

I am content with "Hello World" for now.

2. "DelayWithCancelMT"

New win32 app

Remove resource files, etc, all the code, replace with

#include "stdafx.h"

void APIENTRY _tMyWinMain(HINSTANCE, HINSTANCE, LPTSTR lpCmdLine, int nCmdShow)

{ ::MessageBox(NULL, _T("Hi"), _T("Hi"), 0);

UNREFERENCED_PARAMETER(lpCmdLine);

UNREFERENCED_PARAMETER(nCmdShow);}

How can I get this to be bellow 1KB exe?

There are many new things turned on in VS 2005 (that I don't know how to turn off).

I will continue tomorrow.

Okay, Windows 2003 SP1/64.

I am coming to get you.

1. "Hello 64 bit World." console

Should be a no-brainer

2. "Delay with cancel" multi-threaded

Without RTL of any kind.

Hope this is possible with the new compiler/linker

Destination release exe should be bellow 2KB

What kind of structure and segment alignments can I have?

What does it look like in the hex-editor?

If I get dependends.exe to run, will be interesting to see what dlls are needed to run a windows program

3. "malloc the hell out of the machine"

Application that uses more than 2GB continuos RAM.

Long lived dream of mine.

OK. time to start.

G.